如何在SpaCy模型中使用SHAP?

如何在SpaCy模型中使用SHAP?

提问于 2021-07-27 04:47:34

我试图通过使用SpaCy解释预测来提高SHAP二进制文本分类模型的可解释性。下面是我到目前为止尝试过的(下面是这教程):

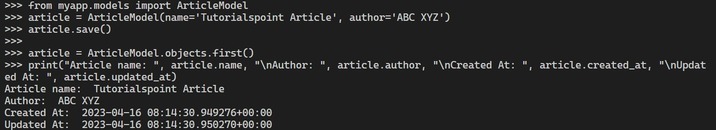

nlp = spacy.load("my_model") # load my model

explainer = shap.Explainer(nlp_predict)

shap_values = explainer(["This is an example"])但我得到了AttributeError: 'str' object has no attribute 'shape'。nlp_predict是我编写的一种方法,它以教程中使用的格式为每个文本获取文本和输出预测概率的列表。我在这里错过了什么?

这是我的格式化函数:

def nlp_predict(texts):

result = []

for text in texts:

prediction = nlp_fn(text) # This returns label probability but in the wrong format

sub_result = []

sub_result.append({'label': 'label1', 'score': prediction["label1"]})

sub_result.append({'label': 'label2', 'score': prediction["label2"]})

result.append(sub_result)

return(result)下面是他们在本教程中使用的预测格式(对于2个数据点):

[[{'label': 'label1', 'score': 2.850986311386805e-05},

{'label': 'label2', 'score': 0.9999715089797974}],

[{'label': 'label1', 'score': 0.00010622951958794147},

{'label': 'label2', 'score': 0.9998937845230103}]]我的函数的输出与此匹配,但我仍然得到了AttributeError。下面是我得到的全部错误信息:

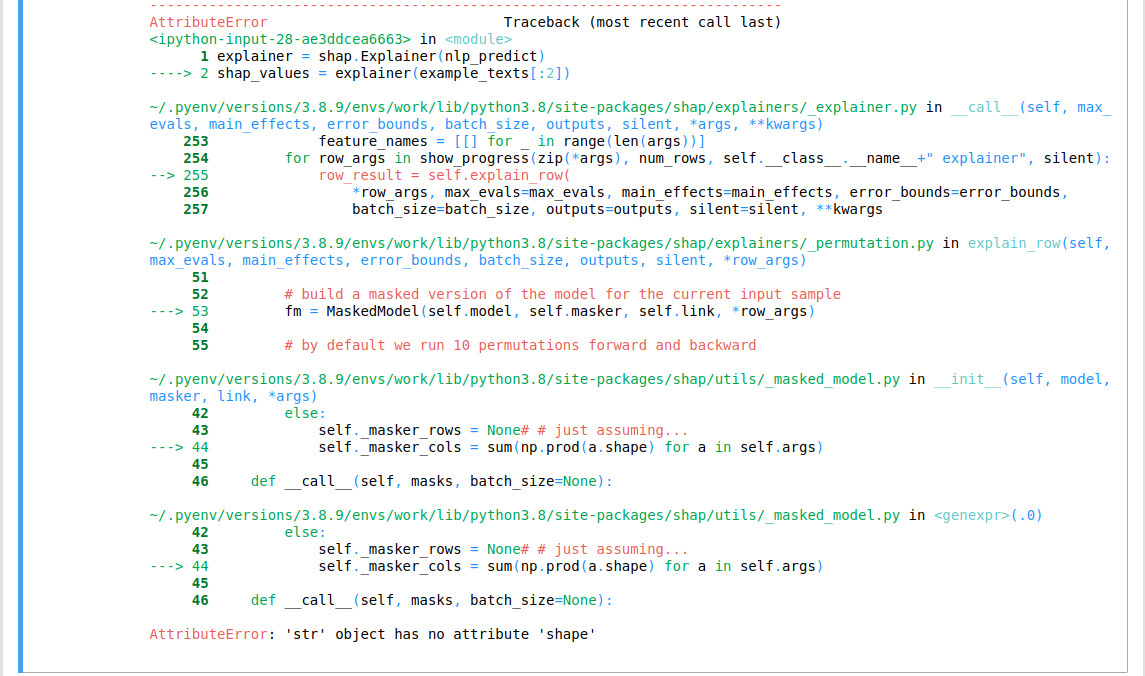

。

回答 1

Stack Overflow用户

发布于 2022-02-18 02:18:48

问题在于shap只为转换器库的令牌器和模型实现了方法。

SpaCy令牌程序的工作方式非常不同,特别是不返回令牌化结果的令牌ids。

因此,要完成这项工作,需要编写一个函数来包装spacy令牌器,以返回与转换器令牌器相同的数据(例如,[{'input_ids': [101, 7592, ...], 'offset_mapping': [(0, 5), (6, 9), ...], ...}]),或者在shap中添加对spacy令牌器/模型的支持。

这里有一个例子,我为前者黑出了一个解决方案。

- 用于预测/标记化的spacy模型的包装器:

import spacy

textcat_spacy = spacy.load("my-model")

tokenizer_spacy = spacy.tokenizer.Tokenizer(textcat_spacy.vocab)

# Run the spacy pipeline on some random text just to retrieve the classes

doc = textcat_spacy("hi")

classes = list(doc.cats.keys())

# Define a function to predict

def predict(texts):

# convert texts to bare strings

texts = [str(text) for text in texts]

results = []

for doc in textcat_spacy.pipe(texts):

# results.append([{'label': cat, 'score': doc.cats[cat]} for cat in doc.cats])

results.append([doc.cats[cat] for cat in classes])

return results

# Create a function to create a transformers-like tokenizer to match shap's expectations

def tok_adapter(text, return_offsets_mapping=False):

doc = tokenizer_spacy(text)

out = {"input_ids": [tok.norm for tok in doc]}

if return_offsets_mapping:

out["offset_mapping"] = [(tok.idx, tok.idx + len(tok)) for tok in doc]

return out- Shap更简单的配置:

import shap

# Create the Shap Explainer

# - predict is the "model" function, adapted to a transformers-like model

# - masker is the masker used by shap, which relies on a transformers-like tokenizer

# - algorithm is set to permuation, which is the one used for transformers models

# - output_names are the classes (altough it is not propagated to the permutation explainer currently, which is why plots do not have the labels)

# - max_evals is set to a high number to reduce the probability of cases where the explainer fails because there are too many tokens

explainer = shap.Explainer(predict, masker=shap.maskers.Text(tok_adapter), algorithm="permutation", output_names=classes, max_evals=1500)- 用法:

sample = "Some text to classify"

# Process the text using SpaCy

doc = textcat_spacy(sample)

# Get the shap values

shap_values = explainer([sample])页面原文内容由Stack Overflow提供。腾讯云小微IT领域专用引擎提供翻译支持

原文链接:

https://stackoverflow.com/questions/68545128

复制相关文章

点击加载更多

相似问题