Parallel wget download does not exit properly

Asked on 2018-02-06 19:42:22

I am trying to download files from a file (test.txt) that contains more than 15,000 links.

I have a script like this:

#!/bin/bash

function download {

FILE=$1

while read line; do

url=$line

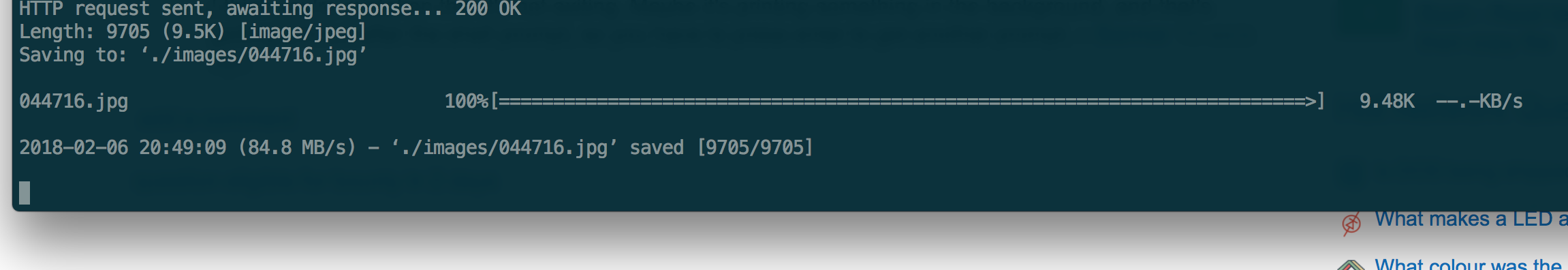

wget -nc -P ./images/ $url

#downloading images which are not in the test.txt,

#by guessing name: 12345_001.jpg, 12345_002.jpg..12345_005.jpg etc.

wget -nc -P ./images/ ${url%.jpg}_{001..005}.jpg

done < $FILE

}

#test.txt contains the URLs

split -l 1000 ./temp/test.txt ./temp/split

#read splitted files and pass to the download function

for f in ./temp/split*; do

download $f &

donetest.txt:

http://xy.com/12345.jpg

http://xy.com/33442.jpg

...

I split the file into several parts and daemonize the wget process (download $f &) so that the script can jump to the next split file containing links.

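The script works, but it does not exit at the end; I have to press Enter. If I remove the & from the line download $f &, it exits properly, but then I lose the parallel downloading.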
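A likely explanation for the symptom, which the question itself does not state: the script does exit, but it exits as soon as the loop has started the background jobs. The shell prints its prompt while wget output keeps scrolling over it, so the terminal only looks stuck until Enter is pressed. Calling the `wait` builtin after the loop makes the script block until every background job has finished. The sketch below shows that pattern. It runs offline, so the body of `download` is a hypothetical stand-in that only records each URL instead of calling wget, and the two split files are made-up samples.

```shell
#!/bin/bash
# Sketch: start one background job per split file, then wait for all of them.
# The download body is a stand-in (assumption) so the example runs offline.

workdir=$(mktemp -d)

download() {
    # $1: file with one URL per line
    while IFS= read -r url; do
        # stand-in for: wget -nc -P ./images/ "$url"
        printf '%s\n' "$url" >> "$workdir/fetched.$(basename "$1")"
    done < "$1"
}

# two small sample split files (hypothetical content)
printf '%s\n' http://xy.com/12345.jpg http://xy.com/33442.jpg > "$workdir/splitaa"
printf '%s\n' http://xy.com/55555.jpg > "$workdir/splitab"

for f in "$workdir"/split*; do
    download "$f" &
done

# Block here until every background job started above has finished.
wait

fetched_count=$(cat "$workdir"/fetched.* | wc -l | tr -d ' ')
echo "all jobs finished, URLs processed: $fetched_count"
```

In the original script the same idea would amount to adding a single `wait` line after `done`. Note that this still starts one job per split file with no upper limit on concurrency.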

Edit:

As I have since found out, this is not the best way to parallelize wget downloads. Using GNU Parallel would be a better approach.

3 Answers

Stack Overflow user

Accepted answer

Answered on 2018-02-06 20:43:03

May I recommend GNU Parallel to you?

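    parallel --dry-run -j32 -a URLs.txt 'wget -nc -q -P ./images/ {}; wget -nc -q -P ./images/ {.}_{001..005}.jpg'

Here --dry-run only prints the commands that would be run; remove it to actually download. I am only guessing at what your input file URLs.txt looks like, something like:

    http://somesite.com/image1.jpg
    http://someothersite.com/someotherimage.jpg

Or, using your own approach with a function: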

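    #!/bin/bash

    # define and export a function for "parallel" to call
    doit(){
        # $1 is the full URL, $2 is the URL without its extension
        wget -nc -q -P ./images/ "$1"
        # keep the brace pattern outside the quotes so that bash expands it
        wget -nc -q -P ./images/ "$2"_{001..005}.jpg
    }
    export -f doit

    parallel --dry-run -j32 -a URLs.txt doit {} {.}

Stack Overflow user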

Answered on 2018-02-06 20:34:17

Stack Overflow user

Answered on 2018-02-06 20:09:51

- Please read the wget man page / help.

Logging and input file:

    -o,  --output-file=FILE          log messages to FILE.
    -a,  --append-output=FILE        append messages to FILE.
    -d,  --debug                     print lots of debugging information.
    -q,  --quiet                     quiet (no output).
    -v,  --verbose                   be verbose (this is the default).
    -nv, --no-verbose                turn off verboseness, without being quiet.
         --report-speed=TYPE         Output bandwidth as TYPE. TYPE can be bits.
    -i,  --input-file=FILE           download URLs found in local or external FILE.
    -F,  --force-html                treat input file as HTML.
    -B,  --base=URL                  resolves HTML input-file links (-i -F) relative to URL.
         --config=FILE               Specify config file to use.

Download:

    -t,  --tries=NUMBER              set number of retries to NUMBER (0 unlimits).
         --retry-connrefused         retry even if connection is refused.
    -O,  --output-document=FILE      write documents to FILE.
    -nc, --no-clobber                skip downloads that would download to existing files (overwriting them).
    -c,  --continue                  resume getting a partially-downloaded file.
         --progress=TYPE             select progress gauge type.
    -N,  --timestamping              don't re-retrieve files unless newer than local.
         --no-use-server-timestamps  don't set the local file's timestamp by the one on the server.
    -S,  --server-response           print server response.
         --spider                    don't download anything.
    -T,  --timeout=SECONDS           set all timeout values to SECONDS.
         --dns-timeout=SECS          set the DNS lookup timeout to SECS.
         --connect-timeout=SECS      set the connect timeout to SECS.
         --read-timeout=SECS         set the read timeout to SECS.
    -w,  --wait=SECONDS              wait SECONDS between retrievals.
         --waitretry=SECONDS         wait 1..SECONDS between retries of a retrieval.
         --random-wait               wait from 0.5*WAIT...1.5*WAIT secs between retrievals.
         --no-proxy                  explicitly turn off proxy.
    -Q,  --quota=NUMBER              set retrieval quota to NUMBER.
         --bind-address=ADDRESS      bind to ADDRESS (hostname or IP) on local host.
         --limit-rate=RATE           limit download rate to RATE.
         --no-dns-cache              disable caching DNS lookups.
         --restrict-file-names=OS    restrict chars in file names to ones OS allows.
         --ignore-case               ignore case when matching files/directories.
    -4,  --inet4-only                connect only to IPv4 addresses.
    -6,  --inet6-only                connect only to IPv6 addresses.
         --prefer-family=FAMILY      connect first to addresses of specified family, one of IPv6, IPv4, or none.
         --user=USER                 set both ftp and http user to USER.
         --password=PASS             set both ftp and http password to PASS.
         --ask-password              prompt for passwords.
         --no-iri                    turn off IRI support.
         --local-encoding=ENC        use ENC as the local encoding for IRIs.
         --remote-encoding=ENC       use ENC as the default remote encoding.
         --unlink                    remove file before clobber.
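One possible reading of this answer, which it does not spell out, is that wget can take the URL list directly through `-i` and skip already downloaded files with `-nc`, so the shell `read` loop is not needed for the plain URLs. The sketch below only builds and prints such a command. The paths are taken from the question's script, and the choice of `-q` is an assumption. A single wget process like this downloads sequentially rather than in parallel.

```shell
#!/bin/bash
# Sketch: let wget read the URL list itself instead of looping in the shell.
# -nc skips files that already exist, -q silences output,
# -i reads URLs from a file, -P sets the target directory.

url_file=./temp/test.txt   # path from the question's script
target_dir=./images/       # path from the question's script

cmd=(wget -nc -q -i "$url_file" -P "$target_dir")

# Print the command instead of running it; run "${cmd[@]}" to download.
printed=$(echo "${cmd[@]}")
echo "$printed"
```

The guessed names such as 12345_001.jpg are not covered by this command, since they do not appear in the input file.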

Original content provided by Stack Overflow. Original link:

https://stackoverflow.com/questions/48650729
