Cisco VPP 24.02 Explained: The vlib_buffer_main_init Logic of the Buffer Module, Part I


通信行业搬砖工

Published 2024-10-25 13:22:09


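01=Recap of Previous Installments

Previous installments covered the topics below. The most recent one was vlib_stats_init(), the function that initializes VPP's statistics module.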

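Compiling Cisco VPP 24.02 on Ubuntu: A Walkthrough

Running VPP 24.02 on Ubuntu: Understanding the startup.conf Configuration File

Cisco VPP 24.02 Explained: vlib_unix_main Initialization

Cisco VPP 24.02 Explained: The Logic of thread0

Cisco VPP 24.02 Explained: The Logic of vlib_main

Cisco VPP 24.02 Explained: Memory Initialization with vlib_physmem_init

Cisco VPP 24.02 Explained: Logging Module Initialization with vlib_log_init

Cisco VPP 24.02 Explained: Statistics Module Initialization with vlib_stats_init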

This installment continues the series with the initialization logic of VPP's buffer module.

02=Introduction to vlib_buffer_main_init

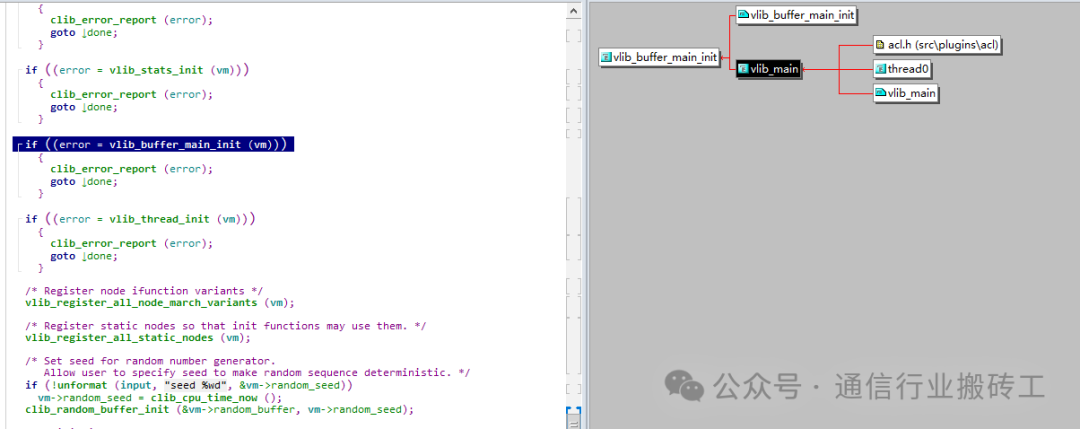

This installment covers vlib_buffer_main_init(), which initializes VPP's buffer module. It is called from vlib_main().

Its code is as follows:

```c
clib_error_t *
vlib_buffer_main_init (struct vlib_main_t *vm)
{
  vlib_buffer_main_t *bm;
  clib_error_t *err = 0;
  clib_bitmap_t *bmp = 0, *bmp_has_memory = 0;
  u32 numa_node;
  vlib_buffer_pool_t *bp;
  u8 *name = 0, first_valid_buffer_pool_index = ~0;

  vlib_buffer_main_alloc (vm);

  bm = vm->buffer_main;
  bm->log_default = vlib_log_register_class ("buffer", 0);
  bm->ext_hdr_size = __vlib_buffer_external_hdr_size;

  clib_spinlock_init (&bm->buffer_known_hash_lockp);

  bmp = os_get_online_cpu_node_bitmap ();
  bmp_has_memory = os_get_cpu_with_memory_bitmap ();

  if (bmp && bmp_has_memory)
    bmp = clib_bitmap_and (bmp, bmp_has_memory);

  /* no info from sysfs, assuming that only numa 0 exists */
  if (bmp == 0)
    bmp = clib_bitmap_set (bmp, 0, 1);

  if (clib_bitmap_last_set (bmp) >= VLIB_BUFFER_MAX_NUMA_NODES)
    clib_panic ("system have more than %u NUMA nodes",
                VLIB_BUFFER_MAX_NUMA_NODES);

  clib_bitmap_foreach (numa_node, bmp)
    {
      u8 *index = bm->default_buffer_pool_index_for_numa + numa_node;
      index[0] = ~0;
      if (bm->buffers_per_numa[numa_node] == 0)
        continue;

      if ((err = vlib_buffer_main_init_numa_node (vm, numa_node, index)))
        {
          clib_error_report (err);
          clib_error_free (err);
          continue;
        }

      if (first_valid_buffer_pool_index == 0xff)
        first_valid_buffer_pool_index = index[0];
    }

  if (first_valid_buffer_pool_index == (u8) ~0)
    {
      err = clib_error_return (0, "failed to allocate buffer pool(s)");
      goto done;
    }

  /* Nodes without their own pool fall back to the first valid pool */
  clib_bitmap_foreach (numa_node, bmp)
    {
      if (bm->default_buffer_pool_index_for_numa[numa_node] == (u8) ~0)
        bm->default_buffer_pool_index_for_numa[numa_node] =
          first_valid_buffer_pool_index;
    }

  /* Register cached/used/available gauges for every non-empty pool */
  vec_foreach (bp, bm->buffer_pools)
    {
      vlib_stats_collector_reg_t reg = { .private_data = bp - bm->buffer_pools };
      if (bp->n_buffers == 0)
        continue;

      reg.entry_index =
        vlib_stats_add_gauge ("/buffer-pools/%v/cached", bp->name);
      reg.collect_fn = buffer_gauges_collect_cached_fn;
      vlib_stats_register_collector_fn (&reg);

      reg.entry_index = vlib_stats_add_gauge ("/buffer-pools/%v/used", bp->name);
      reg.collect_fn = buffer_gauges_collect_used_fn;
      vlib_stats_register_collector_fn (&reg);

      reg.entry_index =
        vlib_stats_add_gauge ("/buffer-pools/%v/available", bp->name);
      reg.collect_fn = buffer_gauges_collect_available_fn;
      vlib_stats_register_collector_fn (&reg);
    }

done:
  vec_free (bmp);
  vec_free (bmp_has_memory);
  vec_free (name);
  return err;
}
```

Function declaration: clib_error_t *vlib_buffer_main_init (vlib_main_t *vm);

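Return value: a clib_error_t pointer for the caller. It is 0 on success, or an error object if no buffer pool could be allocated on any NUMA node.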
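The two clib_bitmap_foreach loops above decide which buffer pool each NUMA node uses by default. The sketch below re-creates that decision in plain C so it can be run outside VPP. It rests on these simplifying assumptions:

- The NUMA nodes are a 64-bit mask instead of a clib_bitmap_t.
- The pool index that a successful vlib_buffer_main_init_numa_node() call would return is passed in as an array.
- All names (select_default_pools, MAX_NUMA, INVALID_POOL) are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical stand-ins, not VPP's real types or limits. */
#define MAX_NUMA 8
#define INVALID_POOL ((uint8_t) ~0)

/* Mirrors the two loops in vlib_buffer_main_init():
 * - nodes with zero configured buffers, or whose pool creation failed,
 *   keep INVALID_POOL in the first pass;
 * - the first successfully created pool (in node order) becomes the
 *   fallback default for every such node in the second pass.
 * pool_for_node[n] is the index a successful create would return,
 * or INVALID_POOL to simulate a failed create.
 * Returns 0 on success, -1 when no pool exists at all. */
static int
select_default_pools (uint64_t node_mask, const uint32_t *buffers_per_numa,
                      const uint8_t *pool_for_node, uint8_t *default_pool)
{
  uint8_t first_valid = INVALID_POOL;

  for (int n = 0; n < MAX_NUMA; n++)
    {
      if (!(node_mask & (1ULL << n)))
        continue;
      default_pool[n] = INVALID_POOL;
      if (buffers_per_numa[n] == 0)
        continue;               /* node configured with no buffers */
      if (pool_for_node[n] == INVALID_POOL)
        continue;               /* create failed: VPP reports and moves on */
      default_pool[n] = pool_for_node[n];
      if (first_valid == INVALID_POOL)
        first_valid = default_pool[n];
    }

  if (first_valid == INVALID_POOL)
    return -1;                  /* "failed to allocate buffer pool(s)" */

  for (int n = 0; n < MAX_NUMA; n++)
    if ((node_mask & (1ULL << n)) && default_pool[n] == INVALID_POOL)
      default_pool[n] = first_valid;
  return 0;
}
```

Here is an example. Nodes 0 to 2 are online, node 1 is configured with zero buffers, and pool creation fails on node 2. In that case nodes 1 and 2 both end up using node 0's pool.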

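03=Engineering Analysis of the Function

1. Memory allocation and initialization: vlib_buffer_main_alloc() allocates the vlib_buffer_main_t structure. The function then does three things:

- It registers a "buffer" log class.
- It sets the external header size.
- It initializes the spinlock that protects the known-buffer hash.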

```c
vlib_buffer_main_t *bm;
clib_error_t *err = 0;
clib_bitmap_t *bmp = 0, *bmp_has_memory = 0;
u32 numa_node;
vlib_buffer_pool_t *bp;
u8 *name = 0, first_valid_buffer_pool_index = ~0;

vlib_buffer_main_alloc (vm);

bm = vm->buffer_main;
bm->log_default = vlib_log_register_class ("buffer", 0);
bm->ext_hdr_size = __vlib_buffer_external_hdr_size;

clib_spinlock_init (&bm->buffer_known_hash_lockp);
```

vlib_buffer_main_alloc() allocates the structure from the heap and zeroes it. It also sets default_data_size to VLIB_BUFFER_DEFAULT_DATA_SIZE (2 KB):

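```c
void
vlib_buffer_main_alloc (vlib_main_t * vm)
{
  vlib_buffer_main_t *bm;

  /* Already allocated: nothing to do */
  if (vm->buffer_main)
    return;

  vm->buffer_main = bm = clib_mem_alloc (sizeof (bm[0]));
  clib_memset (vm->buffer_main, 0, sizeof (bm[0]));
  bm->default_data_size = VLIB_BUFFER_DEFAULT_DATA_SIZE;
}
```

2. NUMA node handling: two helpers return node bitmaps. os_get_online_cpu_node_bitmap() gives the online NUMA nodes, and os_get_cpu_with_memory_bitmap() gives the NUMA nodes that have memory attached. When both are available, their intersection is the set of nodes used for buffer pools.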

```c
bmp = os_get_online_cpu_node_bitmap ();
bmp_has_memory = os_get_cpu_with_memory_bitmap ();

if (bmp && bmp_has_memory)
  bmp = clib_bitmap_and (bmp, bmp_has_memory);

/* If sysfs provided no information, assume only NUMA node 0 exists */
if (bmp == 0)
  bmp = clib_bitmap_set (bmp, 0, 1);

/* Panic if the highest NUMA node id reaches the supported maximum */
if (clib_bitmap_last_set (bmp) >= VLIB_BUFFER_MAX_NUMA_NODES)
  clib_panic ("system have more than %u NUMA nodes",
              VLIB_BUFFER_MAX_NUMA_NODES);
```

On Linux, os_get_online_cpu_node_bitmap() reads the sysfs file /sys/devices/system/node/online. On other systems it returns 0:

```c
__clib_export clib_bitmap_t *
os_get_online_cpu_node_bitmap ()
{
#if __linux__
  return clib_sysfs_read_bitmap ("/sys/devices/system/node/online");
#else
  return 0;
#endif
}
```

os_get_cpu_with_memory_bitmap() likewise reads the sysfs file /sys/devices/system/node/has_memory:

__clib_export clib_bitmap_t *os_get_cpu_with_memory_bitmap ()

{

#if __linux__

return clib_sysfs_read_bitmap ("/sys/devices/system/node/has_memory");

#else

return 0;

#endif

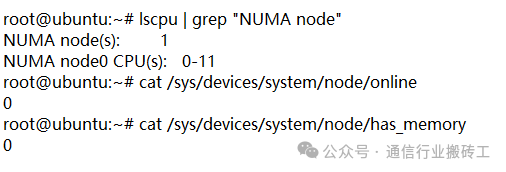

}在ubuntu中查询numa信息和上述两个proc文件的信息如下所示:
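Both sysfs files use the kernel's range-list format, for example 0-1,3. The sketch below shows how such a list becomes a node mask and how step 2 combines the two masks. It rests on these simplifying assumptions:

- A 64-bit integer stands in for clib_bitmap_t.
- SKETCH_MAX_NUMA_NODES is a made-up stand-in for VLIB_BUFFER_MAX_NUMA_NODES.
- Returning 0 replaces clib_panic.

parse_node_list and usable_numa_nodes are hypothetical helpers, not VPP's clib_sysfs_read_bitmap().

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

#define SKETCH_MAX_NUMA_NODES 8 /* hypothetical stand-in limit */

/* Parse a sysfs node list such as "0-1,3\n" into a bit mask.
 * Returns 0 for empty or malformed input. */
static uint64_t
parse_node_list (const char *s)
{
  uint64_t mask = 0;
  char *end;

  while (*s && *s != '\n')
    {
      long lo = strtol (s, &end, 10);
      long hi = lo;
      if (end == s || lo < 0 || lo > 63)
        return 0;
      s = end;
      if (*s == '-')            /* range "lo-hi" */
        {
          hi = strtol (s + 1, &end, 10);
          if (end == s + 1 || hi < lo || hi > 63)
            return 0;
          s = end;
        }
      for (long n = lo; n <= hi; n++)
        mask |= 1ULL << n;
      if (*s == ',')
        s++;
      else if (*s && *s != '\n')
        return 0;
    }
  return mask;
}

/* Combine "online" and "has_memory" like step 2 does. Returns 0 where
 * VPP would clib_panic (a node id at or above the limit). */
static uint64_t
usable_numa_nodes (const char *online, const char *has_memory)
{
  uint64_t a = parse_node_list (online);
  uint64_t b = parse_node_list (has_memory);
  uint64_t m = (a && b) ? (a & b) : a; /* AND only if both were read */
  if (m == 0)
    m = 1;                      /* no sysfs info: assume node 0 only */
  if (m >> SKETCH_MAX_NUMA_NODES)
    return 0;
  return m;
}
```

For example, a two-socket machine that reports online as 0-1 and has_memory as 0 would place buffer pools only on node 0.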

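3. Per-node buffer pool setup: the function walks every usable NUMA node and initializes its default buffer pool index. It then calls vlib_buffer_main_init_numa_node() to create that node's pool.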

clib_bitmap_foreach (numa_node, bmp)

{

u8 *index = bm->default_buffer_pool_index_for_numa + numa_node;

index[0] = ~0;

if (bm->buffers_per_numa[numa_node] == 0)

continue;

if ((err = vlib_buffer_main_init_numa_node (vm, numa_node, index)))

{

clib_error_report (err);

clib_error_free (err);

continue;

}

if (first_valid_buffer_pool_index == 0xff)

first_valid_buffer_pool_index = index[0];

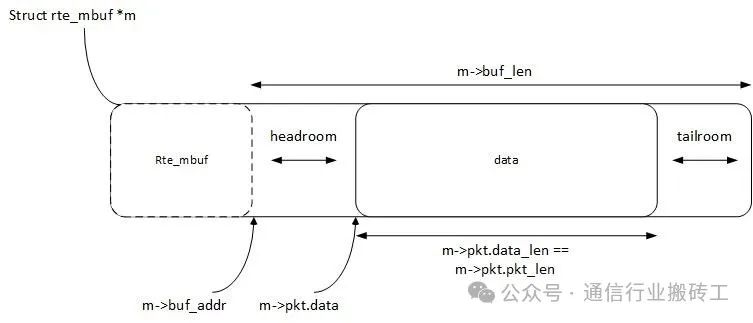

}在解释该部分代码逻辑之前,我们先介绍buffer相关的知识:dpdk收到的数据包用rte_mbuf结构描述。vpp为了兼容其它收包node(netmap,pcap等)改为使用vlib_buffer_t来描述数据包。
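This background explains the ext_hdr_size set in step 1. A driver can keep its own header directly in front of each vlib_buffer_t, as VPP's DPDK plugin does with rte_mbuf. Each buffer's memory therefore covers three parts: the external header, the vlib_buffer_t header, and the packet data.

The sketch below approximates that arithmetic, assuming the sum is simply rounded up to a 64-byte cache line. VPP's real vlib_buffer_alloc_size() may add further padding. The helper names and the sizes in the example are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define SKETCH_CACHE_LINE 64u /* assumed cache-line size */

/* Assumed per-buffer layout:
 *   [external header, e.g. rte_mbuf][vlib_buffer_t header][packet data]
 * The total is rounded up to a whole cache line. Hypothetical helper,
 * not VPP's vlib_buffer_alloc_size(). */
static uint32_t
sketch_buffer_alloc_size (uint32_t ext_hdr_size, uint32_t hdr_size,
                          uint32_t data_size)
{
  uint32_t sz = ext_hdr_size + hdr_size + data_size;
  return (sz + SKETCH_CACHE_LINE - 1) & ~(SKETCH_CACHE_LINE - 1);
}

/* With that layout, the external header starts ext_hdr_size bytes
 * before the buffer header inside the same allocation. */
static void *
sketch_ext_hdr_from_buffer (void *buffer_hdr, uint32_t ext_hdr_size)
{
  return (uint8_t *) buffer_hdr - ext_hdr_size;
}
```

As a worked example, take a 128-byte external header, a 256-byte buffer header and the 2 KB default data size. Each buffer would then occupy 2432 bytes, which is this buffer_size that vlib_buffer_main_init_numa_alloc() later compares against the page size.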

The logic of vlib_buffer_main_init_numa_node():

```c
static clib_error_t *
vlib_buffer_main_init_numa_node (struct vlib_main_t *vm, u32 numa_node,
                                 u8 * index)
{
  /* Get the buffer_main structure from vm */
  vlib_buffer_main_t *bm = vm->buffer_main;
  u32 physmem_map_index;
  clib_error_t *error;

  /* No page size configured: try hugepages first */
  if (bm->log2_page_size == CLIB_MEM_PAGE_SZ_UNKNOWN)
    {
      /* First attempt: the default hugepage size (CLIB_MEM_PAGE_SZ_DEFAULT_HUGE) */
      error = vlib_buffer_main_init_numa_alloc (vm, numa_node,
                                                &physmem_map_index,
                                                CLIB_MEM_PAGE_SZ_DEFAULT_HUGE,
                                                0 /* unpriv */ );
      if (!error)
        goto buffer_pool_create;

      /* If alloc failed, retry without hugepages */
      vlib_log_warn (bm->log_default,
                     "numa[%u] falling back to non-hugepage backed "
                     "buffer pool (%U)", numa_node, format_clib_error, error);
      clib_error_free (error);

      error = vlib_buffer_main_init_numa_alloc (vm, numa_node,
                                                &physmem_map_index,
                                                CLIB_MEM_PAGE_SZ_DEFAULT,
                                                1 /* unpriv */ );
    }
  else /* A page size was configured: allocate with that size */
    error = vlib_buffer_main_init_numa_alloc (vm, numa_node,
                                              &physmem_map_index,
                                              bm->log2_page_size,
                                              0 /* unpriv */ );
  if (error)
    return error;

buffer_pool_create:
  /* Create a new buffer pool with vlib_buffer_pool_create(). It takes:
   * - the buffer data size (from vlib_buffer_get_default_data_size()),
   * - the physmem map index obtained by the allocation above,
   * - a formatted pool name. */
  *index =
    vlib_buffer_pool_create (vm, vlib_buffer_get_default_data_size (vm),
                             physmem_map_index, "default-numa-%d", numa_node);

  /* A returned index of (u8) ~0 (255, the largest u8 value) means the
   * maximum number of buffer pools was reached, so return an error. */
  if (*index == (u8) ~0)
    error = clib_error_return (0, "maximum number of buffer pools reached");

  return error;
}
```

The logic of vlib_buffer_main_init_numa_alloc():

```c
static clib_error_t *
vlib_buffer_main_init_numa_alloc (struct vlib_main_t *vm, u32 numa_node,
                                  u32 * physmem_map_index,
                                  clib_mem_page_sz_t log2_page_size,
                                  u8 unpriv)
{
  vlib_buffer_main_t *bm = vm->buffer_main;
  u32 default_buffers_per_numa = bm->default_buffers_per_numa;
  u32 buffers_per_numa = bm->buffers_per_numa[numa_node];
  clib_error_t *error;
  u32 buffer_size;
  uword n_pages, pagesize;
  u8 *name = 0;

  ASSERT (log2_page_size != CLIB_MEM_PAGE_SZ_UNKNOWN);

  pagesize = clib_mem_page_bytes (log2_page_size);
  buffer_size = vlib_buffer_alloc_size (bm->ext_hdr_size,
                                        vlib_buffer_get_default_data_size
                                        (vm));
  if (buffer_size > pagesize)
    return clib_error_return (0, "buffer size (%llu) is greater than page "
                              "size (%llu)", buffer_size, pagesize);

  /* No configured default: pick one based on privilege level */
  if (default_buffers_per_numa == 0)
    default_buffers_per_numa = unpriv ?
      VLIB_BUFFER_DEFAULT_BUFFERS_PER_NUMA_UNPRIV :
      VLIB_BUFFER_DEFAULT_BUFFERS_PER_NUMA;

  /* ~0 for this node means "use the default" */
  if (buffers_per_numa == ~0)
    buffers_per_numa = default_buffers_per_numa;

  name = format (0, "buffers-numa-%d%c", numa_node, 0);
  /* Ceiling division: pages needed to hold buffers_per_numa buffers */
  n_pages = (buffers_per_numa - 1) / (pagesize / buffer_size) + 1;
  error = vlib_physmem_shared_map_create (vm, (char *) name,
                                          n_pages * pagesize,
                                          min_log2 (pagesize), numa_node,
                                          physmem_map_index);
  vec_free (name);
  return error;
}
```

Inside this function, pagesize, buffer_size and n_pages are computed as follows:

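```c
pagesize = clib_mem_page_bytes (log2_page_size);
buffer_size = vlib_buffer_alloc_size (bm->ext_hdr_size,
                                      vlib_buffer_get_default_data_size
                                      (vm));
if (buffer_size > pagesize)
  return clib_error_return (0, "buffer size (%llu) is greater than page "
                            "size (%llu)", buffer_size, pagesize);

name = format (0, "buffers-numa-%d%c", numa_node, 0);
n_pages = (buffers_per_numa - 1) / (pagesize / buffer_size) + 1;
```

The space is then allocated on the requested pages with vlib_physmem_shared_map_create(). These are hugepages by default, or regular pages if the fallback path was taken. The call reserves n_pages * pagesize bytes on the NUMA node.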
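The defaulting rules and the n_pages formula can be checked with a small worked example, and the sketch below reproduces them in plain C. The following values are illustrative assumptions:

- default buffer counts of 16384, and 8192 for the unprivileged fallback;
- a buffer size of 2432 bytes.

Check VPP's buffer.h and the show buffers output for the real values on your build. pick_buffers_per_numa and pages_needed are hypothetical names.

```c
#include <assert.h>
#include <stdint.h>

/* Illustrative defaults, standing in for
 * VLIB_BUFFER_DEFAULT_BUFFERS_PER_NUMA(_UNPRIV). */
#define SKETCH_DEFAULT_BUFFERS 16384u
#define SKETCH_DEFAULT_BUFFERS_UNPRIV 8192u

/* Mirrors the defaulting in vlib_buffer_main_init_numa_alloc():
 * a global default of 0 means "choose by privilege level", and a
 * per-node value of ~0 means "use the global default". */
static uint32_t
pick_buffers_per_numa (uint32_t default_per_numa, uint32_t per_node,
                       int unpriv)
{
  if (default_per_numa == 0)
    default_per_numa = unpriv ? SKETCH_DEFAULT_BUFFERS_UNPRIV
                              : SKETCH_DEFAULT_BUFFERS;
  return per_node == UINT32_MAX ? default_per_numa : per_node;
}

/* Same ceiling division as the original:
 * n_pages = (buffers_per_numa - 1) / (pagesize / buffer_size) + 1 */
static uint64_t
pages_needed (uint32_t buffers_per_numa, uint64_t pagesize,
              uint32_t buffer_size)
{
  assert (buffer_size <= pagesize && buffers_per_numa > 0);
  uint64_t per_page = pagesize / buffer_size; /* whole buffers per page */
  return (buffers_per_numa - 1) / per_page + 1;
}
```

Under these assumptions, one 2 MB hugepage holds 862 buffers. That means 16384 buffers need 20 hugepages (40 MB) per NUMA node. The unprivileged 4 KB-page fallback fits only one buffer per page, so it needs 8192 pages for its 8192 buffers.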

```c
n_pages = (buffers_per_numa - 1) / (pagesize / buffer_size) + 1;
error = vlib_physmem_shared_map_create (vm, (char *) name,
                                        n_pages * pagesize,
                                        min_log2 (pagesize), numa_node,
                                        physmem_map_index);
```

The resulting allocation can be inspected with the show buffers CLI command, which is implemented as follows:

```c
static clib_error_t *
show_buffers (vlib_main_t *vm, unformat_input_t *input,
              vlib_cli_command_t *cmd)
{
  /* Print every buffer pool via format_vlib_buffer_pool_all */
  vlib_cli_output (vm, "%U", format_vlib_buffer_pool_all, vm);
  return 0;
}

VLIB_CLI_COMMAND (show_buffers_command, static) = {
  .path = "show buffers",
  .short_help = "Show packet buffer allocation",
  .function = show_buffers,
};
```

In the next installment, we will continue with the logic of vlib_buffer_pool_create().

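About the Author

Author: 通信行业搬砖工

Formerly with a large telecom equipment vendor in Shenzhen

Formerly worked on backbone core switches and routers at H3Com

Formerly a telecom "expert" at a Fortune Global 500 telecom company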

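This article is part of the Tencent Cloud self-media syndication program and is shared from a WeChat official account.

Originally published: 2024-10-24. For copyright concerns, contact cloudcommunity@tencent.com to request removal.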
